November 1st, 2010

Web crawlers: each member of the SQ functions like an agent that crawls the depths of the web, starting all from the same seed. When the a crawler finds and empty slot in the queue of URLs, it stops. Parallel crawlers.

Web Crawler essentials:

§ Web crawlers are programs that exploit the graph structure of the Web to move from page to page. In their infancy such programs were also called wanderers, robots, spiders, fish and worms.

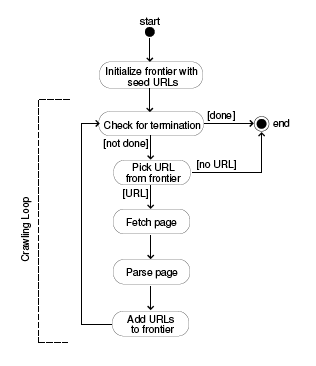

§ The crawler maintains a list of unvisited URLs called frontier.

§ The list is initialized with seed URLs.

§ Each crawling loop involves:

a) picking the next URL to crawl from the frontier,

b) fetching the page corresponding to the URL through HTTP,

c) parsing the retrieved page to extract the URLs and application specific information, and

d) finally adding the unvisited URLs to the frontier.

§ Before the URLs are added to the frontier they may be assigned a score that represents the estimated benefit of visiting the page corresponding to the URL.

§ The crawling process may be terminated when a certain number of pages have been crawled.

§ If the crawler is ready to crawl another page and the frontier is empty, the situation signals a dead-end for the crawler. The crawler has no new page to fetch and hence it stops.

§ The crawl history is a time-stamped list of URLs that were fetched by the crawler: it shows the path of the crawler through the Web starting from the seed pages.

§ At times a crawler may encounter a spider trap that leads it to a large number of different URLs that refer to the same page.

§ The code associated with the frontier can make sure that every consecutive sequence of k (say 100) URLs, picked by the crawler, contains only one URL from a fully qualified host name (e.g. ww.cnn.com). As side-effects, the crawler is polite by not accessing the same Web site too often, and the crawled pages tend to be more diverse.

§ The Robot Exclusion Protocol provides a mechanism for Web server administrators to communicate their file access policies; more specifically to identify files that may not be accessed by a crawler. This is done by keeping a file named robots.txt under the root directory of the Web server. This file provides access policy for different User-agents (robots or crawlers).

§ Once a page has been fetched, we need to parse its content to extract information that will feed and possibly guide the future path of the crawler.

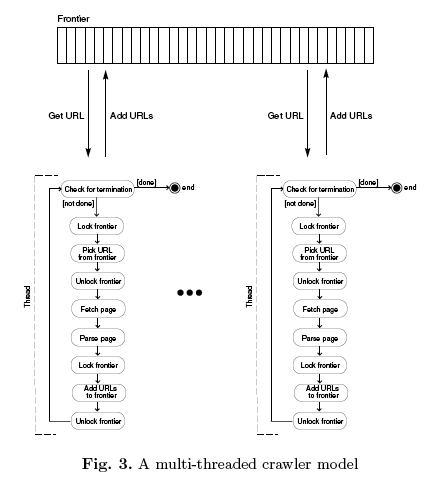

§ Multi-threading, where each thread follows a crawling loop, can provide reasonable speed-up and efficient use of available bandwidth.

Flow of a basic sequential crawler:

Flow of a basic multi-threaded crawler: